Artificial Intelligence (AI) in recruitment can be a double-edged sword. Used ethically, AI is a tool for good that provides powerful, bias-free data points. But in an unregulated industry, vendors are releasing ill-considered AI solutions that automate and compound human biases.

Vervoe’s Senior Product Manager Nicole Bowes recently hosted a webinar with David Weinberg (Co-founder, Vervoe) and Pramudi Suraweera (Principal Data Scientist, Seek) to discuss good AI versus bad AI in talent acquisition technology.

Understanding AI in recruitment

As AI technology becomes increasingly prevalent in recruitment processes, the onus is on the people who use these solutions to demystify the technology: to understand how it works, recognize the ethical challenges involved, and learn what questions to ask when purchasing AI tech.

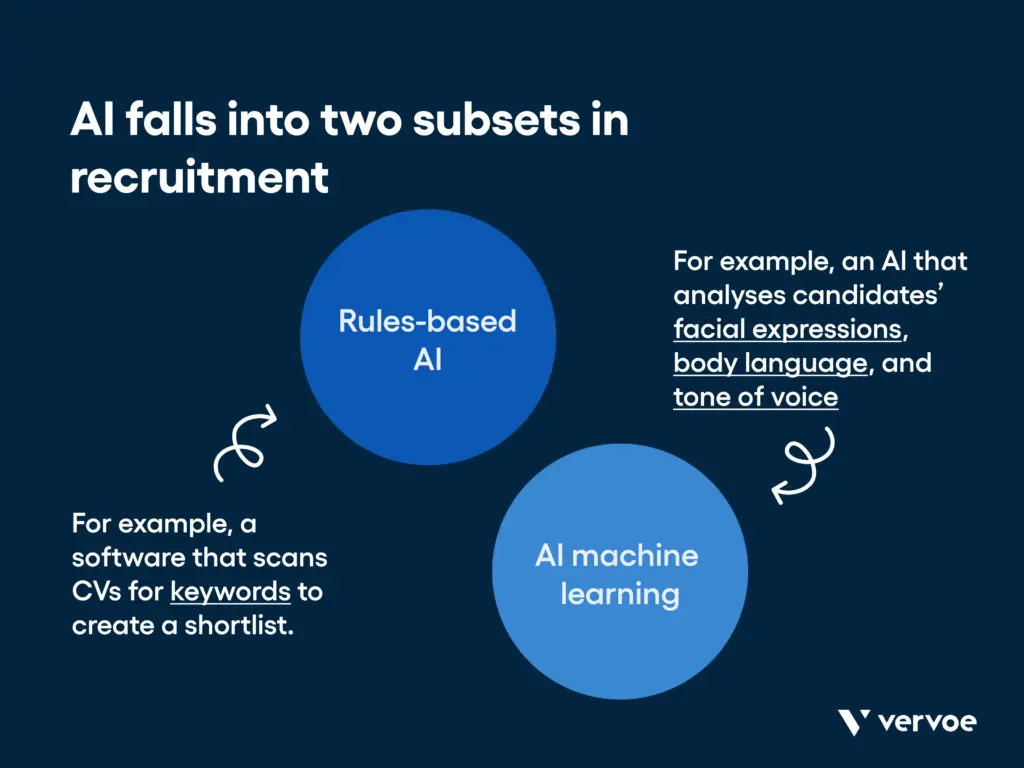

But what exactly is AI in recruitment? For Seek’s Pramudi Suraweera, AI falls into two subsets:

Rules-based AI

Rules-based AI involves very simple, rules-based engines that have been programmed by a human to respond in a specific way to a trigger. In hiring, an example could be software that scans CVs for keywords to create a shortlist.
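To make the keyword-scanning example concrete, here is a minimal sketch of a rules-based shortlist. The keyword list, the `min_hits` threshold, and the candidate IDs are all hypothetical and chosen for illustration; they do not come from any vendor's product. The point is that every decision follows a rule a human wrote in advance.

```python
import re

# Hypothetical keyword list chosen by a recruiter (an assumption for this sketch).
REQUIRED_KEYWORDS = {"python", "sql", "stakeholder"}


def matched_keywords(cv_text: str, keywords: set[str]) -> set[str]:
    """Return the keywords that appear as whole words in the CV text."""
    words = set(re.findall(r"[a-z0-9+#]+", cv_text.lower()))
    return keywords & words


def shortlist(cvs: dict[str, str], keywords: set[str], min_hits: int) -> list[str]:
    """Apply a fixed, human-written rule: keep CVs with at least min_hits keywords."""
    return sorted(
        candidate_id
        for candidate_id, text in cvs.items()
        if len(matched_keywords(text, keywords)) >= min_hits
    )


# Made-up CV snippets, keyed by a made-up candidate ID.
cvs = {
    "cand-001": "Built Python services and wrote SQL reports.",
    "cand-002": "Managed a retail team and handled stakeholder updates.",
    "cand-003": "Python, SQL, and stakeholder management across three projects.",
}

print(shortlist(cvs, REQUIRED_KEYWORDS, min_hits=2))  # ['cand-001', 'cand-003']
```

Nothing here is learned from data. If the rule is a poor one (for instance, a keyword that favours one group's vocabulary), the software will apply it faithfully to every CV.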

Machine learning AI

Significantly more advanced than rules-based AI, machine learning (ML) learns from data, compares it to known outcomes, and finds patterns that explain how a result was reached. Examples in hiring include ML models that learn from historical data what makes a good or bad hire in an organisation, or AI that analyses candidates’ facial expressions, body language, and tone of voice.

“The difference,” says Vervoe’s David Weinberg, “is that rule-based AI works by doing what a human has told it to do, while machine learning figures out what to do for itself.”

AI bias in recruitment

Machine learning is susceptible to human hiring bias introduced through the data set. “AI can be a tool for good, or it can perpetuate human bias and make it worse”, says David. “And that comes down to how the machine has been taught.”

Pramudi says machine learning has to be managed in a responsible way to avoid incorporating our own biases and sabotaging diversity hiring.

Webinar host Nicole Bowes shared three high-profile examples of “bad” AI in hiring processes:

- Amazon famously scrapped an AI recruiting tool that showed bias against women. The tool had been trained on 10 years of recruitment data, which inadvertently taught it that men had historically been preferred over women in tech roles.

- Bavarian Broadcasting conducted a study wherein an actor was hired to answer the same questions on camera using identical facial expressions, body language, and tone of voice. She was then scored by face-scanning AI on traits such as openness, extraversion, and agreeableness. However, the results changed significantly when the actor wore glasses, wore a headscarf, or sat in front of a bookshelf.

- The Electronic Privacy Information Center (a prominent rights group) filed a federal complaint in the U.S. against HireVue for using face scanning in its AI-powered recruitment software, citing unfair and deceptive practices.

While a human will take countless factors and emotions into account when making a decision, machine learning can only draw conclusions from the data it has been fed.

“One of the most important things with machine learning is making sure you only feed the computer data that it makes sense for it to learn from”

David Weinberg, Co-Founder and Chief Product Officer of Vervoe

“If you feed a computer images like those used in the Bavarian Broadcasting example, it’s drawing conclusions like ‘scarf plus glasses equals correct answer’, or ‘scarf no glasses equals bad answer’. This may work perfectly on the data set as it was being created, but as soon as you open it up to the world it no longer works; the framework just crumbles. That’s a perfect example of bad AI.”

The goal, therefore, is to feed machine learning AI with a clean data set by removing all information that could contribute to subconscious bias.

“Unbiased machine learning is improved not by adding more to the data set, but by taking things out”

David Weinberg, Co-Founder and Chief Product Officer of Vervoe

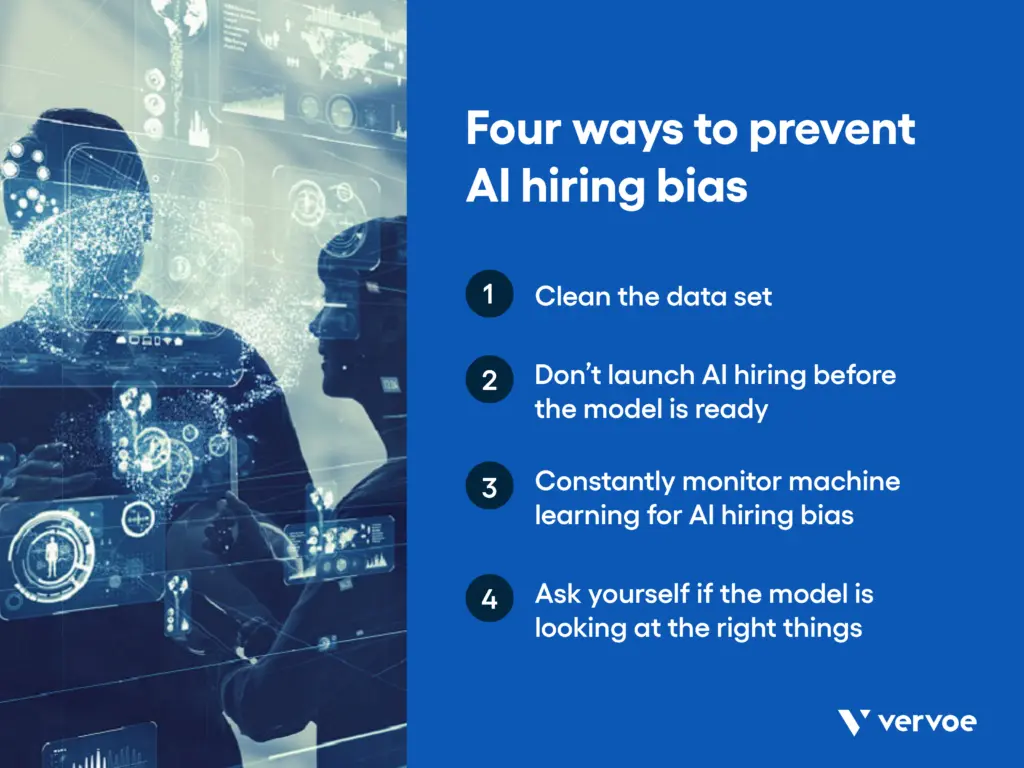

Four ways to prevent AI hiring bias in machine learning models

Pramudi and David shared several ways to prevent machine learning models from learning the wrong things.

1. Clean the data set

People may think that data scientists spend most of their time programming deep learning models, but the reality is very different, according to Pramudi. “Ninety percent of the job is spent working with the data; understanding the [nature of] the data, policing what data goes in, cleaning the data … only a tiny percentage of your time is spent on building learning models.”

For companies that scan CVs, for example, cleaning the data could involve removing names (ethnic bias), location (geographic or socioeconomic bias), gender (gender bias), date-of-birth (age bias), and so on. This process is known as blind hiring.
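The field-removal step described above can be sketched as follows. The field names and the sample record are hypothetical; a real CV schema will differ, and this is an illustration rather than any company's actual pipeline.

```python
# Hypothetical field names (assumptions for this sketch); adjust to the real schema.
BIAS_PRONE_FIELDS = {"name", "location", "gender", "date_of_birth"}


def blind_record(record: dict) -> dict:
    """Return a copy of the record without fields that could introduce bias."""
    return {
        key: value
        for key, value in record.items()
        if key not in BIAS_PRONE_FIELDS
    }


# Made-up candidate record for illustration only.
candidate = {
    "candidate_id": "cand-001",
    "name": "Alex Example",
    "location": "Springfield",
    "gender": "female",
    "date_of_birth": "1990-01-01",
    "skills": ["python", "sql"],
    "assessment_score": 82,
}

blinded = blind_record(candidate)
print(sorted(blinded))  # ['assessment_score', 'candidate_id', 'skills']
```

Dropping explicit fields is only a first step. Free-text sections of a CV can still carry proxies for the removed attributes, so the remaining data needs human review as well.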

David agrees: “At Vervoe, we spent two years collecting clean data before building our machine learning model: two years of candidates completing assessments that were manually graded by humans to build up a data set.”

2. Don’t launch AI hiring before the model is ready

“You can’t just train a system and say ‘here it is!’ and unleash it on the world”, says David. “You’ve got to be cautious. The more exposure it has, the more it has learned, the more it’s proven itself, the more trust you can put into it. It’s just irresponsible otherwise”.

Seek has a similar approach. “We don’t just release something into the wild without thinking through the consequences”, says Pramudi. “Sometimes we hold back on releases because we’re not comfortable or not ready. In some cases, we’ve even pulled stuff out after release.”

There should be several sets of eyes on the AI model. For rules-based AI such as chatbots, the questions, outcomes, and decision trees need to be audited to ensure the person who built them has not subconsciously inserted preconceived ideas of what is good and bad.

Similarly, the data that goes into a machine learning model should have several people looking at it, or go through an external audit to ensure it is as unbiased as possible.

3. Constantly monitor machine learning for AI bias in hiring

Avoid taking a set-and-forget approach to machine learning. The model must be constantly monitored to make sure AI recruiting bias is not introduced.

Pramudi recommends testing the model by putting in known biases (like a gender bias) and seeing what it outputs. “Continuously test and monitor after going live, and gather continuous feedback from the people using it”, he says.
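One way to read Pramudi's suggestion is as a flip test: score the same candidate twice, changing only the attribute under suspicion, and check whether the output moves. The sketch below uses two toy scoring functions as stand-ins for a trained model. Both are invented for illustration, and one is deliberately biased so the test has something to catch. This is not a description of how Seek or Vervoe test their models.

```python
from typing import Any, Callable

Candidate = dict[str, Any]


def biased_toy_score(candidate: Candidate) -> float:
    """Toy stand-in for a model that has learned a gender bias (planted on purpose)."""
    score = 0.1 * candidate["years_experience"]
    if candidate["gender"] == "male":
        score += 0.2  # the planted bias
    return score


def fair_toy_score(candidate: Candidate) -> float:
    """Toy stand-in for a model that ignores gender."""
    return 0.1 * candidate["years_experience"]


def score_gap_when_flipped(
    score_fn: Callable[[Candidate], float],
    candidate: Candidate,
    attribute: str,
    alternative: Any,
) -> float:
    """Score the candidate twice, changing only one attribute, and return the difference."""
    flipped = {**candidate, attribute: alternative}
    return score_fn(flipped) - score_fn(candidate)


# Made-up candidate; only the gender field is changed between the two scoring runs.
candidate = {"years_experience": 5, "gender": "female"}

for label, score_fn in [("biased", biased_toy_score), ("fair", fair_toy_score)]:
    gap = score_gap_when_flipped(score_fn, candidate, "gender", "male")
    flagged = abs(gap) > 1e-9
    print(f"{label}: gap={gap:.2f} flagged={flagged}")
```

A non-zero gap means the protected attribute is influencing the score. In practice the same check would be run across many candidates and repeated after every retraining, in line with the advice to keep monitoring after going live.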

“Set-and-forget doesn’t work”, agrees David. “You can’t train your models on a data set that represents the whole world; you can only do your best to make sure it’s a really good starting point for launch, then move into active learning from there”.

4. Ask yourself if the model is looking at the right things

Getting machine learning right from an ethical perspective starts with being human. “We understand it’s a huge responsibility to develop a system that will help decide if someone gets hired”, says David. “We treat that really seriously and approach it as humans by questioning what the AI is judging candidates on. Would we want to be judged on these things? Would we want someone to judge us on our surname, or our gender, or how quickly we answer a question in an online assessment?”

Because the technology is so new, there’s no real consensus in industry or academia on AI recruitment ethics, AI hiring bias, or the governance of data models. Seek has developed internal guidelines for evaluating and validating its processes and models from an ethics and bias perspective, and has had these externally audited.

Questions to ask AI hiring solution vendors

What questions should you ask an AI hiring solution vendor? David and Pramudi recommend the following:

- Can you explain the technology to me in simple terms?

- Is it rules-based AI or machine learning?

- How will this save time in my job?

- How will this help me make better decisions?

- What data set is the machine learning model drawing from, where did it come from and how big is it?

- How long did it take to train the machine learning model?

- What are your responsible data practices?

- Is the model constantly monitored for introduced bias?

- How was the data set cleaned to remove AI hiring bias?

- What market (U.S., Australia, Europe, etc.) was the machine learning model developed for?

- Will the machine learning model learn from my company and my context, or will it make the same decisions for every company?