AI now plays a major decision-making role in hiring and assists in several recruitment stages for many organizations. But with its increased influence, talent acquisition (TA) leaders and HR tech stakeholders are under more pressure to prevent AI bias and avoid reinforcing existing inequities.

As regulations tighten and candidates’ expectations rise, talent acquisition leaders and hiring managers are increasingly accountable for evaluating whether their AI hiring tools are truly bias-free. This article clarifies what AI bias is, how it arises in recruiting tools, and what steps organizations can take to address it.

Understanding AI bias in hiring

AI bias in hiring is unintended discrimination or sidelining that occurs when the outputs of AI hiring tools systematically favor certain groups. In most cases, it reflects the datasets used to train the software’s algorithm: those datasets often contain inequalities or incomplete representations, and the system reproduces those patterns.

Hiring bias shows up in various ways, such as a preference for candidate profiles that resemble past hires, or prejudice based on language, gender, or other demographic characteristics. Research shows that LLMs in particular are prone to bias and exhibit some form of unfairness regardless of the model.

With recruiters relying more heavily on AI and automated screening techniques, these biases prove increasingly dangerous, sidelining qualified applicants silently. Moreover, by eliminating capable candidates, biased AI poses legal and ethical risks to organizations and erodes candidate trust, further weakening their talent pools and credibility.

Already, 49% of job seekers in an American Staffing Association survey believe AI tools used in job recruiting are more biased than human recruiters. When AI screening amplifies societal inequalities instead of making hiring objective and efficient, its purpose is ultimately defeated.

4 types of AI bias in hiring

AI bias occurs in various forms depending on how a hiring system is trained, designed, and used. Understanding where each type comes from makes it easier to diagnose and fix these biases. These four types of AI hiring bias capture the most common patterns and how they shape hiring outcomes.

1. Data bias

Data bias occurs when the training data does not reflect the full range of qualified candidates, usually because the data is imbalanced or incomplete. AI learns and improves using historical patterns, which means any skews in the data translate directly into flaws in its results.

Data bias has two major types, namely:

- Historical bias: This happens when the real-world data used to train the model already contains inequalities or suggests unfairness. For example, a model trained mostly on candidates with years of experience may undervalue less-experienced candidates, despite being built correctly.

- Sampling bias: Sampling bias occurs when the data used to train the model doesn’t represent the full population of candidates the system will evaluate. As a result, the AI performs accurately for some groups but inaccurately for others. A system trained only on tech candidates, for instance, may not yield accurate results when used in other sectors.
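
One rough way to spot sampling bias is to compare how each group is represented in the training data with how it is represented in the pool the tool will actually screen. The Python sketch below illustrates that check. The `representation_gap` function and the sector labels are hypothetical, made up for this example rather than taken from any particular hiring product.

```python
from collections import Counter

def representation_gap(training_groups, applicant_groups):
    """Return, per group, the training-data share minus the applicant-pool share.

    A large positive value means the group is over-represented in training;
    a large negative value means it is under-represented.
    """
    train_counts = Counter(training_groups)
    pool_counts = Counter(applicant_groups)
    gaps = {}
    for group in set(train_counts) | set(pool_counts):
        train_share = train_counts[group] / len(training_groups)
        pool_share = pool_counts[group] / len(applicant_groups)
        gaps[group] = round(train_share - pool_share, 3)
    return gaps

# Hypothetical data: a model trained almost entirely on tech candidates,
# then used to screen a much broader applicant pool.
training = ["tech"] * 90 + ["finance"] * 10
applicants = ["tech"] * 40 + ["finance"] * 30 + ["sales"] * 30

gaps = representation_gap(training, applicants)
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.3f}")
```

Groups with strongly negative gaps, like sales in this made-up data, are the ones for which the model has seen little or no evidence, so its results for them deserve extra scrutiny.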

2. Algorithmic bias

Algorithmic bias comes from design choices inside the model. Even with good data, weighting or scoring rules can create unintended preferences. How your model is built and what criteria it prioritizes can affect your hiring results negatively, causing poor hiring decisions and, eventually, bad hires.

A typical case is an algorithm that treats career gaps as a warning signal, sidelining parents returning to work or great candidates affected by layoffs.
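
To make the career-gap example concrete, here is a minimal Python sketch. The `Candidate` fields, the `score` function, and the penalty value are all hypothetical, chosen only to show how a single design choice can reorder a shortlist even when the underlying skill data is unchanged.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    skill_score: float       # hypothetical 0-100 result from a job-relevant task
    career_gap_months: int   # time away from work, for any reason

def score(candidate, gap_penalty_per_month=0.0):
    """Skill score minus an optional penalty for each month of career gap."""
    return candidate.skill_score - gap_penalty_per_month * candidate.career_gap_months

def rank(candidates, gap_penalty_per_month=0.0):
    """Return candidate names ordered from highest to lowest score."""
    ordered = sorted(
        candidates,
        key=lambda c: score(c, gap_penalty_per_month),
        reverse=True,
    )
    return [c.name for c in ordered]

candidates = [
    Candidate("A", skill_score=88, career_gap_months=18),  # stronger skills, long gap
    Candidate("B", skill_score=80, career_gap_months=0),
]

without_penalty = rank(candidates)                          # skills only
with_penalty = rank(candidates, gap_penalty_per_month=0.5)  # gap treated as a warning signal

print(without_penalty, with_penalty)
```

The data is identical in both runs. Only the scoring rule changes, and that alone is enough to push the more skilled candidate down the list.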

3. Interaction bias

Interaction bias appears when users unknowingly influence how the AI recruitment software interprets information. After the system is deployed, recruiters may label or prompt it during use in ways that reinforce their own candidate preferences.

This, in turn, influences how it ranks candidates, even when the recruiter’s preference isn’t relevant to job performance.

Say a recruiter consistently rates candidates whose answers sound confident and assertive as top performers. Rather than staying objective, the software learns from these preferences and prioritizes responses with a similar tone, regardless of whether that tone relates to success in the role.

4. Feedback loop bias

Feedback loop bias occurs when the system’s own outputs influence future training data. If an AI hiring tool consistently recommends a narrow type of candidate, its next training cycle will reflect that narrow pool, causing the bias to compound. Essentially, feedback loop bias comes from self-reinforcing cycles, causing earlier mistakes from other bias types to be more pronounced.
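
The compounding effect can be illustrated with a toy simulation. In the Python sketch below, the amplification rule inside `next_share` is purely an illustrative assumption, not a model of how any real hiring tool is retrained. It simply assumes the tool over-recommends whichever profile is already more common, and that each cycle’s recommendations become the next cycle’s training data.

```python
def next_share(share, amplification=2.0):
    """Share of the favored profile in the next cycle's recommendations.

    Assumption for illustration: the tool exaggerates whatever imbalance
    exists in its training data. amplification > 1 means it over-recommends
    the more common profile.
    """
    favored = share ** amplification
    other = (1 - share) ** amplification
    return favored / (favored + other)

def simulate(initial_share, cycles, amplification=2.0):
    """Track the favored profile's share across retraining cycles."""
    shares = [initial_share]
    for _ in range(cycles):
        # This cycle's recommendations become the next cycle's training data.
        shares.append(next_share(shares[-1], amplification))
    return shares

shares = simulate(initial_share=0.6, cycles=5)
for cycle, share in enumerate(shares):
    print(f"cycle {cycle}: favored profile share = {share:.3f}")
```

Under these assumptions, a modest 60/40 imbalance grows with every cycle until the favored profile dominates almost completely, while a perfectly balanced starting point stays balanced.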

5 causes of AI bias in hiring

Bias in AI screening and recruitment software often comes from a combination of several sources, some of them hidden. Here are five common causes of AI bias in hiring to watch out for:

1. Resume-based models

Resume-based AI hiring software typically depends on surface-level metrics like job titles, keywords, and education when making predictions. This traditional approach may appear harmless, but it implicitly mirrors pedigree and access rather than true job potential.

Consequently, candidates with less flashy resumes are screened out, even when they may have higher proficiency, creating data bias.

2. Poor criteria and metrics

AI hiring systems can only optimize for what they are programmed to value. When hiring metrics are vague, outdated, or misaligned with real job success, the model amplifies those weaknesses.

These bad inputs could be tenure, pedigree, or generic competencies instead of validated, role-specific skills. When the wrong metrics shape the model, it automatically favors candidates who match those flawed indicators.

3. Opaque algorithms

Opaque or black-box AI tools show little to no transparency about how the system makes hiring decisions and evaluates candidates. Where there’s hidden internal logic, recruiters have no way to discern which attributes drive scoring, why top candidates are ranked highly, or whether the model is treating groups differently.

Unsurprisingly, bias detection and compliance become difficult because organizations cannot trace decisions back to objective, defensible criteria. In other words, bias is more likely to persist and harder to correct when recruiters can’t inspect the system for flaws.

4. Psychometric or personality testing

Many psychometric models were not designed specifically for hiring and rely on general personality traits rather than job competencies. Because these tools generalize behavior without context, bias can easily enter into the decision-making process.

This is particularly common in customer-facing roles, where extroversion is wrongly assumed to equate to successful performance, ahead of skills like empathy, active listening, or problem-solving.

By using personality data to infer job fit, there’s a higher risk of drawing unsupported conclusions that have no clear relationship to actual performance.

5. Inconsistent audits and iteration

AI systems must be monitored and updated continuously, because their accuracy and fairness can drift over time. Failing to audit models makes organizations lose sight of changes in data patterns, demographic shifts, industry changes, or even software updates.

When these changes go unchecked, biases develop and compound, making the system more unfair and distorting hiring data.

How to fix AI bias in hiring

To reduce bias in hiring, you need sound input and maintenance processes for your AI recruitment software. Below, we’ll discuss four actionable steps you should take for fair, accurate AI screening.

1. Use skills-based evaluations

Skills-based hiring focuses strictly on demonstrated skills and real abilities when grading and ranking candidates. While resume-based models screen keywords that could propagate bias, skills-first models are trained on validated performance outcomes from job-relevant tasks.

This directly reduces bias because the AI bases decisions on evidence of what candidates can do, not who they are or their background profiles. It also creates a more defensible hiring process by tying recommendations to objective, measurable criteria.

Here’s a checklist for implementing skills-based hiring:

- Identify the core skills that directly predict success for each role.

- Use job tasks or practical assessments instead of resume filters.

- Ensure assessments measure real performance, not personality or background.

- Validate that scoring criteria align with actual job outcomes.

- Confirm that all candidates complete the same tasks under the same conditions.

2. Prioritize transparent, explainable algorithms

Clear knowledge of how your model works is essential for responsible use. To continuously inspect and defend your AI’s scoring and hiring decisions, you need software that provides explainable, transparent AI with easy-to-read documentation.

Having a transparent model allows you to identify what metrics your AI prioritizes when evaluating candidates, which helps you spot disparities early. This strengthens compliance, supports fairer decision-making, and allows you to correct unexpected issues promptly.

Follow this checklist to evaluate transparency in AI tools:

- Ask vendors to show exactly which inputs influence candidate scores.

- Confirm that the platform can provide clear reasoning behind every recommendation.

- Ensure the vendor publishes its fairness, data usage, and model training practices.

- Verify that explanations are simple enough for non-technical teams to understand.
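
As a rough picture of what “showing which inputs influence a score” can look like, the Python sketch below breaks a candidate’s total score into per-input contributions. It assumes a simple weighted sum. The `WEIGHTS` table, the input names, and the `explain_score` function are hypothetical, and real tools may use models that need more involved explanation methods.

```python
# Hypothetical weights for three job-relevant inputs (they sum to 1.0).
WEIGHTS = {
    "task_accuracy": 0.6,
    "written_response": 0.3,
    "time_management": 0.1,
}

def explain_score(inputs, weights=WEIGHTS):
    """Return the total score and each input's contribution to it."""
    contributions = {name: weights[name] * inputs[name] for name in weights}
    total = sum(contributions.values())
    return total, contributions

total, parts = explain_score(
    {"task_accuracy": 90, "written_response": 70, "time_management": 50}
)

# List the inputs from most to least influential for this candidate.
for name, value in sorted(parts.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {value:.1f}")
print(f"total: {total:.1f}")
```

A breakdown like this lets a non-technical reviewer see at a glance which inputs drove a recommendation, and notice if an attribute unrelated to the job is carrying too much weight.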

3. Supplement the AI shortlist with human decision-making

AI was designed to assist recruiters in making decisions, not to override, or worse, completely replace human intelligence and intuition. After using your AI model for initial assessment and screening, as a recruiter, you need to review recommendations, interpret nuances, and apply context that falls outside structured data.

With this balance, your hiring process benefits from both AI’s speed and efficiency, and human supervision and creativity. By keeping experienced recruiters in charge of the recruitment cycle, you reduce the risk of automated bias going unnoticed and maintain accountability.

Here’s how to balance automation with human judgment:

- Review AI shortlists to ensure they align with validated job requirements.

- Ensure that humans make the final hiring decision.

- Add structured interviews or hands-on tasks to confirm AI findings.

- Document reasons for advancing or rejecting candidates beyond AI scores.

- Train recruiters to identify and counter their own biases.

4. Continuously audit models

AI systems don’t stay static; their accuracy and fairness change as data, roles, and workforce patterns evolve. Regular audits ensure the model is still performing the way it was designed to and hasn’t drifted into unfair or unreliable territory.

Implement routine checks to confirm that the system’s recommendations remain aligned with real job performance, and maintain compliance as regulations shift. Consistent monitoring keeps your system trustworthy, predictable, and defensible.

Here are a few tips to help you get started:

- Conduct regular reviews of hiring outcomes across demographic groups.

- Monitor for changes in accuracy as job needs evolve.

- Reassess training data to ensure it reflects current workforce realities.

- Update scoring criteria when roles, tools, or success metrics change.

- Ask vendors to provide periodic fairness and performance reports.
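
A review of outcomes across demographic groups can start with something as simple as comparing selection rates. The Python sketch below computes each group’s rate of advancing past screening and its ratio to the highest-rate group. The 0.8 threshold follows the “four-fifths” rule of thumb used in US employment contexts, and it is included here as an assumption for illustration, not as a legal test. The group labels, record layout, and function names are hypothetical.

```python
from collections import defaultdict

def selection_rates(records):
    """records: iterable of (group, advanced) pairs, where advanced is a bool."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in records:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        return {group: None for group in rates}  # nobody advanced; ratio undefined
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the threshold and warrant a closer look."""
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)

# Hypothetical screening outcomes for two groups of 100 applicants each.
records = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 18 + [("group_b", False)] * 82
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation rather than proof of bias. Small sample sizes and differences in applicant pools can also move these numbers, which is one more reason to run the check regularly instead of once.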

Fix AI bias in hiring with Vervoe

The software you choose determines whether your AI reduces bias or reinforces it. Tools built on opaque models and outdated criteria quickly affect the fairness and quality of hires. Simply put, getting this decision right is essential for any organization adopting AI responsibly.

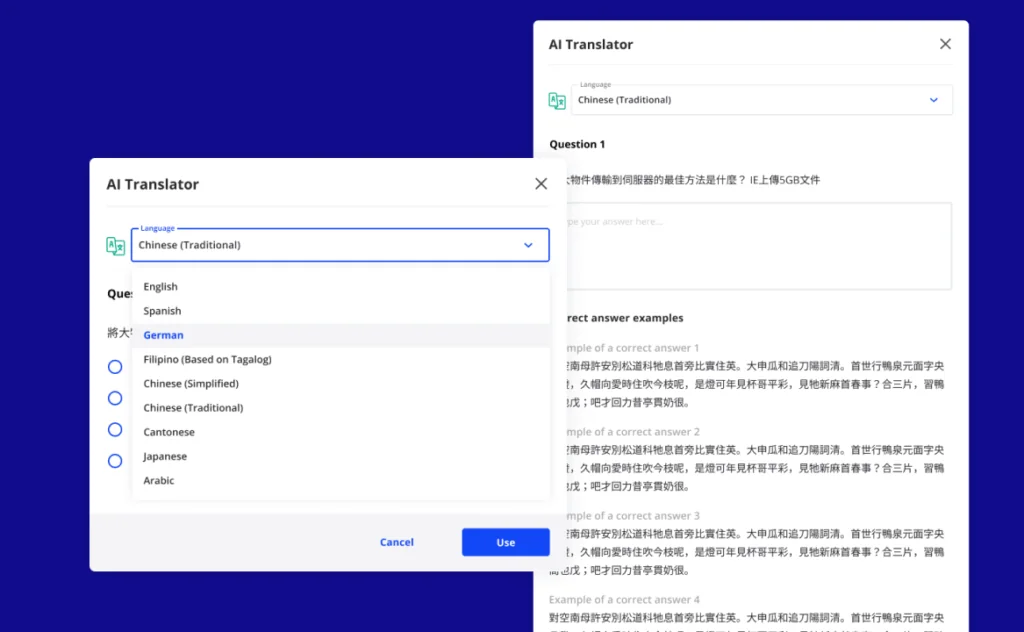

Vervoe, our AI recruiting tool, is built for teams that prioritize hiring accuracy and transparency. It runs on a fully explainable algorithm that shows how skills influence performance. Grounded in job-specific abilities, our platform gives you precise grading, scoring, and analytics for data-backed, reliable hiring. Vervoe’s validated assessment library is relevant across sectors, with 300+ skills tests covering numerous roles from sales to finance and tech.

Experience Vervoe’s ability to make your hiring fairer and stronger. Schedule a demo and enjoy nonstop hiring accuracy today.