If you use machine learning and artificial intelligence (AI) tools in recruitment, you likely do so to remove bias and find high-quality hires fast. But what if the very tool meant to help were hurting you instead?

In 2014, Amazon began developing a resume screening tool to help it find top talent fast. By 2015, the company realized the tool was biased against women for technical roles, and it later scrapped the project. Because the system downgraded resumes associated with women, even one of the strongest developers in the applicant pool could be passed over simply for being a woman.

But the risks go beyond losing brilliant talent. Unfair hiring can also damage your brand reputation and, in the US, lead to up to $300,000 in compensatory and punitive damages per claimant.

This article will help you understand and distinguish between unfair and fair AI in recruitment. That way, you’ll be able to build an inclusive recruitment process and net higher-quality candidates.

AI in recruitment so far: the good and the bad

Before AI, recruiters relied on employee referrals, printed ads, and other low-tech means to hire outstanding candidates. Things changed in the 1990s when the internet arrived.

People began using the internet to showcase their profiles, and hiring managers began posting job openings online. That shift online set the stage for AI in recruitment, with resume screening tools taking the lead.

Recruitment AI has come a long way since then and now includes video screening tools, chatbots, and more. Here’s how it has worked out so far.

Good uses of AI in hiring

Here are two examples of AI used well in hiring.

iSelect gave every candidate a chance to prove their skills

“It [Vervoe] eliminated bias, and we saw huge improvement straight away. Candidates were now being assessed purely on their skills. We now only look at their CV after the hiring decisions have been made, which is a huge step forward for us.”

Fiona Baker, Recruitment Manager

iSelect, an Australian insurance comparison site, had trouble retaining sales consultants past the 6-month mark.

With the help of Vervoe’s AI-powered platform, iSelect replaced resume screening with skills assessments at the first stage of hiring. Baker says the assessments improved the quality of candidates who reached the interview stage: they were informed, prepared, and had already proven their skills. Most of all, she says, the assessments eliminated bias and leveled the playing field for all applicants.

L’Oreal found great hires who wouldn’t fit the regular profile

“We have been able to recruit profiles that we probably wouldn’t have hired just on their CV. Like a tech profile for marketing, or a finance profile for sales.”

Eva Azoulay, Global Vice-President of Human Resources

Beauty company L’Oreal used AI to save time, screen for quality and diversity, and improve the candidate experience.

Azoulay says the company saved 200 hours screening 12,000 applicants for 80 internship spots, and the resulting group was the most diverse it had ever hired.

Bad use of AI in hiring

Ad algorithms limit who sees gender-neutral ads based on gender

“We also found that setting the gender to female resulted in getting fewer instances of an ad related to high paying jobs than setting it to male.”

Amit Datta, Michael Carl Tschantz and Anupam Datta, Researchers

While studying web tracking, researchers found that women were shown fewer ads for high-paying jobs as they browsed the web.

The researchers noticed this when they set the gender to female in ad preference settings. For companies running job ads, this meant their ads reached fewer potential candidates who were women.

Different types of AI tools and how they work

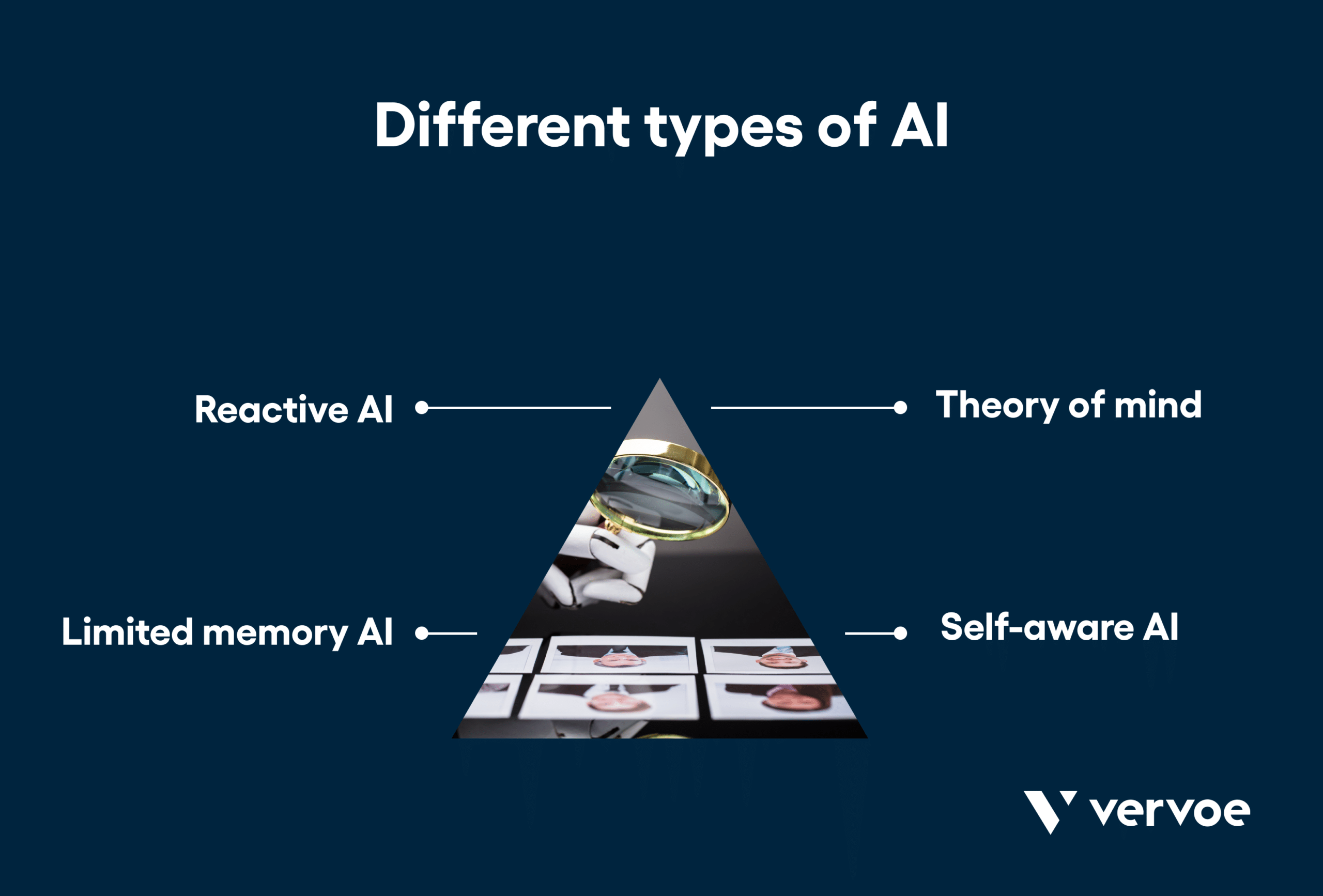

AI is commonly divided into four types, which differ in how they learn to mimic human activities.

Reactive AI

Reactive AI performs tasks based on predefined instructions. The programmer writes those instructions as explicit rules, such as if-else statements.

Example:

If candidate X graduated from college with a Y grade, add them to the screening file.

This type of AI cannot make decisions of its own; it only does what we tell it to.
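To make the rule above concrete, here is a minimal sketch of a reactive screener in Python. The `Candidate` fields and the `MIN_GRADE` threshold of 3.0 are hypothetical stand-ins for “graduated from college” and “a Y grade”; the point is that the program applies a fixed if-else rule and never learns from data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    graduated: bool
    grade: float  # hypothetical GPA on a 4.0 scale


MIN_GRADE = 3.0  # hypothetical "Y grade", fixed by the programmer


def screen(candidates: list[Candidate]) -> list[str]:
    """Reactive screening: a fixed if-else rule, no learning from data."""
    screening_file = []
    for c in candidates:
        if c.graduated and c.grade >= MIN_GRADE:
            screening_file.append(c.name)
    return screening_file


applicants = [
    Candidate("Ana", graduated=True, grade=3.4),
    Candidate("Ben", graduated=True, grade=2.7),
    Candidate("Chloe", graduated=False, grade=3.9),
]
print(screen(applicants))  # ['Ana']
```

The rule behaves identically for every applicant, forever. Whether excluding Chloe is fair depends entirely on the judgment the programmer encoded in the rule.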

Limited memory AI

Limited memory AI learns from past real-life examples, called training data. Programmers use this data to train a model that determines how the software behaves.

Amazon’s screening tool was a limited memory AI. It learned what a strong candidate looked like from resumes submitted to Amazon over the previous 10 years, most of which came from men. Because few of those historical resumes contained the word “women’s” (as in “women’s chess club captain”), the system learned to penalize resumes that did.
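The toy learner below shows how this can happen. It is not Amazon’s model: it is a minimal word-scoring sketch that assigns each word the smoothed log-odds of appearing on hired versus rejected resumes. The `history` data is hypothetical and deliberately skewed so that past rejections include the word “women’s”.

```python
import math
from collections import Counter

# Hypothetical historical decisions: (words on the resume, was the person hired?).
# The past outcomes are skewed: resumes mentioning "women's" were rejected.
history = [
    ({"python", "java", "chess"}, True),
    ({"python", "aws"}, True),
    ({"java", "aws", "chess"}, True),
    ({"aws", "python"}, True),
    ({"python", "women's", "chess"}, False),
    ({"java", "women's"}, False),
]


def learn_token_weights(examples):
    """Log-odds that a word appears on hired vs. rejected resumes (add-one smoothing)."""
    hired, rejected = Counter(), Counter()
    n_hired = sum(1 for _, was_hired in examples if was_hired)
    n_rejected = len(examples) - n_hired
    for tokens, was_hired in examples:
        (hired if was_hired else rejected).update(tokens)
    vocab = set(hired) | set(rejected)
    return {
        t: math.log((hired[t] + 1) / (n_hired + 2))
        - math.log((rejected[t] + 1) / (n_rejected + 2))
        for t in vocab
    }


weights = learn_token_weights(history)


def score(tokens):
    """Sum of learned word weights; unseen words count as 0."""
    return sum(weights.get(t, 0.0) for t in tokens)


print(round(weights["women's"], 2))  # -1.5: the model learned to penalize the word
print(score({"python", "women's"}) < score({"python"}))  # True
```

Nothing in the code mentions gender, yet the learned weights reproduce the skew in the past decisions. That is the core risk of limited memory AI trained on biased history.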

Theory of mind AI

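Theory of mind AI would use emotional intelligence and psychology to make informed decisions.

Video interview analysis tools are often placed in this category because they analyze a candidate’s face, emotions, tone, and so on to draw conclusions. Strictly speaking, though, true theory of mind AI does not exist yet; today’s video tools are trained on past examples, like limited memory AI.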

Self-aware AI

This is the realm of AI “superintelligence.” Self-aware AI would have its own consciousness, thinking, learning, and evolving entirely by itself. No AI has reached this stage yet.

The difference between good AI and bad AI

Whether hiring AI is good or bad comes down to one thing: data. AI can only draw conclusions from the rules and examples we feed it, and from the patterns it finds in them. So ask: who is represented in the data behind your recruitment AI, and where did that data come from?

Data quality

As the saying goes, “garbage in, garbage out”. If the historical data used to create rules and train models is flawed, your AI will be flawed as well.

Say a set of pictures, documents, or other records teaches your AI tool what a good or bad salesperson looks like. If the examples of good salespeople are overwhelmingly women, the AI can learn to favor women and become biased against men.

Good hiring AI is trained on diverse, representative data.
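A simple first check on training data is to compare how groups are represented among the examples labeled “good” against a reference share. The sketch below assumes hypothetical data mirroring the salesperson example above, a 50/50 reference, and a 10-percentage-point tolerance; all three are illustrative choices, not a standard.

```python
from collections import Counter


def label_shares(examples, label=True):
    """Share of each group among training examples with the given label."""
    counts = Counter(group for group, y in examples if y == label)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}


def flag_imbalance(shares, reference, tolerance=0.10):
    """Groups whose share differs from the reference share by more than `tolerance`."""
    return sorted(g for g in reference if abs(shares.get(g, 0.0) - reference[g]) > tolerance)


# Hypothetical labeled data: (gender, labeled a good salesperson?)
training = (
    [("woman", True)] * 80 + [("man", True)] * 20
    + [("woman", False)] * 30 + [("man", False)] * 70
)
shares = label_shares(training)
print(shares)  # {'woman': 0.8, 'man': 0.2}
print(flag_imbalance(shares, {"woman": 0.5, "man": 0.5}))  # ['man', 'woman']
```

A flagged imbalance does not prove the resulting model is biased, but it tells you where to look before training.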

Development team

Humans have inherent biases. Because people build and train AI tools, those tools can inherit human bias.

A team of engineers and developers who all share similar backgrounds is less likely to spot bias in training data. Its members may hold the same unconscious biases against certain groups, with no one on the team to call them out or question fairness-related metrics.

Good AI development involves, at minimum, third parties from different socioeconomic and psychological backgrounds. Good AI and machine learning are developed transparently and inclusively, whereas bad algorithms are built behind closed doors by insular teams.
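One widely used fairness-related metric a reviewer might ask about is the adverse impact ratio behind the “four-fifths rule” in the US Uniform Guidelines on Employee Selection Procedures: each group’s selection rate divided by the highest group’s rate, where a ratio below 0.8 is generally treated as evidence of adverse impact. The sketch below is illustrative only and uses hypothetical screening results; it is not the Delft framework or Vervoe’s method.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (number selected, number of applicants)."""
    return {group: selected / applicants for group, (selected, applicants) in outcomes.items()}


def adverse_impact(outcomes, threshold=0.8):
    """Groups whose selection rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical results from an AI screening tool.
results = {"men": (60, 100), "women": (30, 100)}
print(adverse_impact(results))  # {'women': 0.5}
```

The four-fifths threshold is a rule of thumb rather than a legal bright line, so a team outside the development group should still review flagged results in context.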

The benefits of using fair AI in recruitment

Beyond saving time and increasing productivity, fair AI eliminates bias and boosts the quality of hires.

Eliminating bias

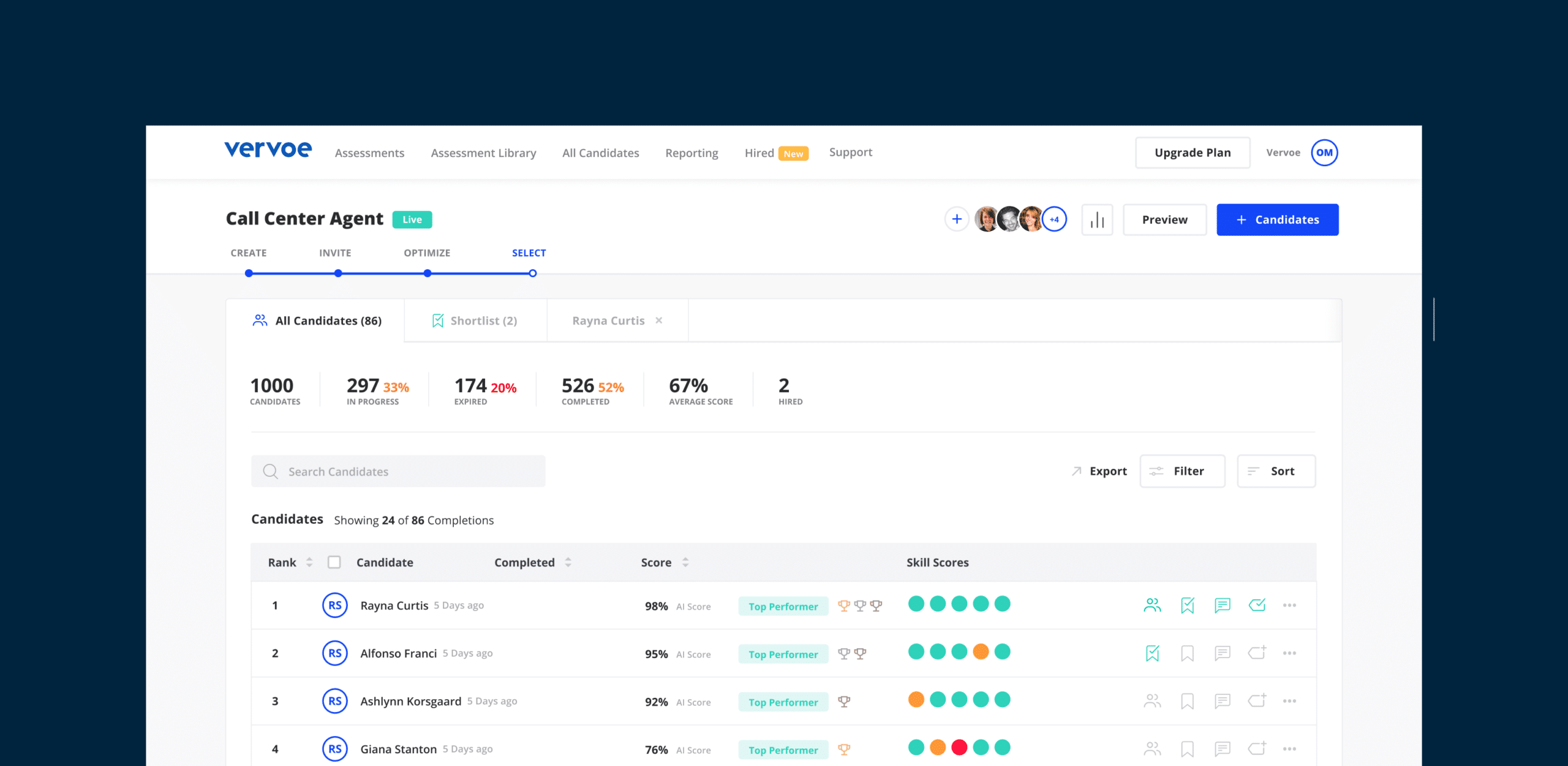

Fair AI lets your team look past irrelevant details such as current employment status and career gaps. With a skills-focused platform like Vervoe, you judge candidates on proven skills alone.

Plenty of research points to the costs of biased hiring. A study from Oregon State University found that even small amounts of hiring bias can cause large financial losses: for a company that hires 8,000 people per year, a 1% increase in gender bias leads to an estimated $2.8 million in lost productivity per year.

Boosting quality of hire

Good AI allows you to judge candidates based on their skills. This means that you’ll be hiring more people who can do the job rather than just talk like they can.

Fair AI recruiting tools can also use machine learning to account for skills adjacency. Say a candidate has a skill that seems unrelated to the role. The AI can break that skill down into the smaller skills that make it up and identify which of those are relevant to the job, something a human screener might miss.
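The sketch below illustrates the idea of skills adjacency. Real tools would learn the relationships between skills with machine learning. Here, a hand-written lookup table (`SKILL_COMPONENTS`, hypothetical) stands in for that learned model, so the example shows only the matching step.

```python
# Hypothetical map from broad skills to the smaller skills that make them up.
SKILL_COMPONENTS = {
    "teaching": {"public speaking", "explaining complex ideas", "planning", "giving feedback"},
    "bartending": {"customer service", "working under pressure", "upselling", "cash handling"},
    "financial analysis": {"spreadsheets", "forecasting", "data interpretation"},
}


def adjacent_skills(candidate_skills, job_requirements):
    """Map each candidate skill to the job-relevant component skills it implies."""
    matches = {}
    for skill in candidate_skills:
        # Skills not in the table are treated as a single component: themselves.
        components = SKILL_COMPONENTS.get(skill, {skill})
        relevant = components & job_requirements
        if relevant:
            matches[skill] = sorted(relevant)
    return matches


sales_role = {"customer service", "upselling", "public speaking", "forecasting"}
print(adjacent_skills(["teaching", "bartending"], sales_role))
# {'teaching': ['public speaking'], 'bartending': ['customer service', 'upselling']}
```

A former teacher and bartender has no “sales” line on their resume, yet both past roles surface skills the sales role needs.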

At iSelect, for example, AI helped improve the quality of candidates who showed up for interviews.

How to ensure your recruitment AI tools are fair

Unfair AI can lead to poor-quality hires and lawsuits. According to research from Delft University of Technology, there are three things to look for in AI recruitment tools:

Accountability: Does the team justify and explain the actions of the AI tool?

The team behind the AI tool should be able to explain how the AI decides. They should also be able to justify the assumptions behind their design rules and models.

For example, developers may assume that a particular college major strongly influences performance in a role. If they code that assumption into the AI, the system will prefer candidates with that major. To justify the assumption, the team should be able to provide relevant research or statistical evidence when you ask about fairness-related metrics.

At Vervoe, we have an open page describing how we create assessments and ensure fairness in grading.

The tool should also explain its individual decisions. If it can’t, it may be hiding bias.
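One simple way a tool can explain its decisions is to report how much each input contributed to a candidate’s score. The sketch below assumes a hypothetical linear scoring rule; the feature names and weights, including the bonus for a “preferred” college major, are illustrative and are exactly the kind of design assumptions the team should be able to justify.

```python
# Hypothetical scoring weights. Each one is a design assumption the team
# should be able to justify, e.g. the bonus for a "preferred" college major.
WEIGHTS = {
    "assessment_score": 1.0,     # points per assessment point (0-100)
    "years_experience": 2.0,     # points per year, capped at MAX_YEARS
    "has_preferred_major": 5.0,  # flat bonus
}
MAX_YEARS = 10


def score_with_explanation(candidate):
    """Return the total score and each feature's contribution, largest first."""
    contributions = {
        "assessment_score": WEIGHTS["assessment_score"] * candidate["assessment_score"],
        "years_experience": WEIGHTS["years_experience"]
        * min(candidate["years_experience"], MAX_YEARS),
        "has_preferred_major": WEIGHTS["has_preferred_major"]
        * int(candidate["has_preferred_major"]),
    }
    total = sum(contributions.values())
    explanation = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, explanation


total, why = score_with_explanation(
    {"assessment_score": 80, "years_experience": 3, "has_preferred_major": False}
)
print(total)  # 86.0
print(why)    # [('assessment_score', 80.0), ('years_experience', 6.0), ('has_preferred_major', 0.0)]
```

A linear rule was chosen here because its explanations are exact. More complex models need dedicated attribution methods to produce comparable explanations.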

Responsibility: Are they actively anticipating and mitigating negatives?

Responsibility in AI hiring tools means active anticipation, reflexivity, inclusion, and responsiveness.

With fair AI, developers actively anticipate the technology’s limitations, such as a lack of empathy or an inability to recognize soft skills.

They also plan how to control risks and negative outcomes. For candidate scoring, for example, the system will provide ways to override scores.

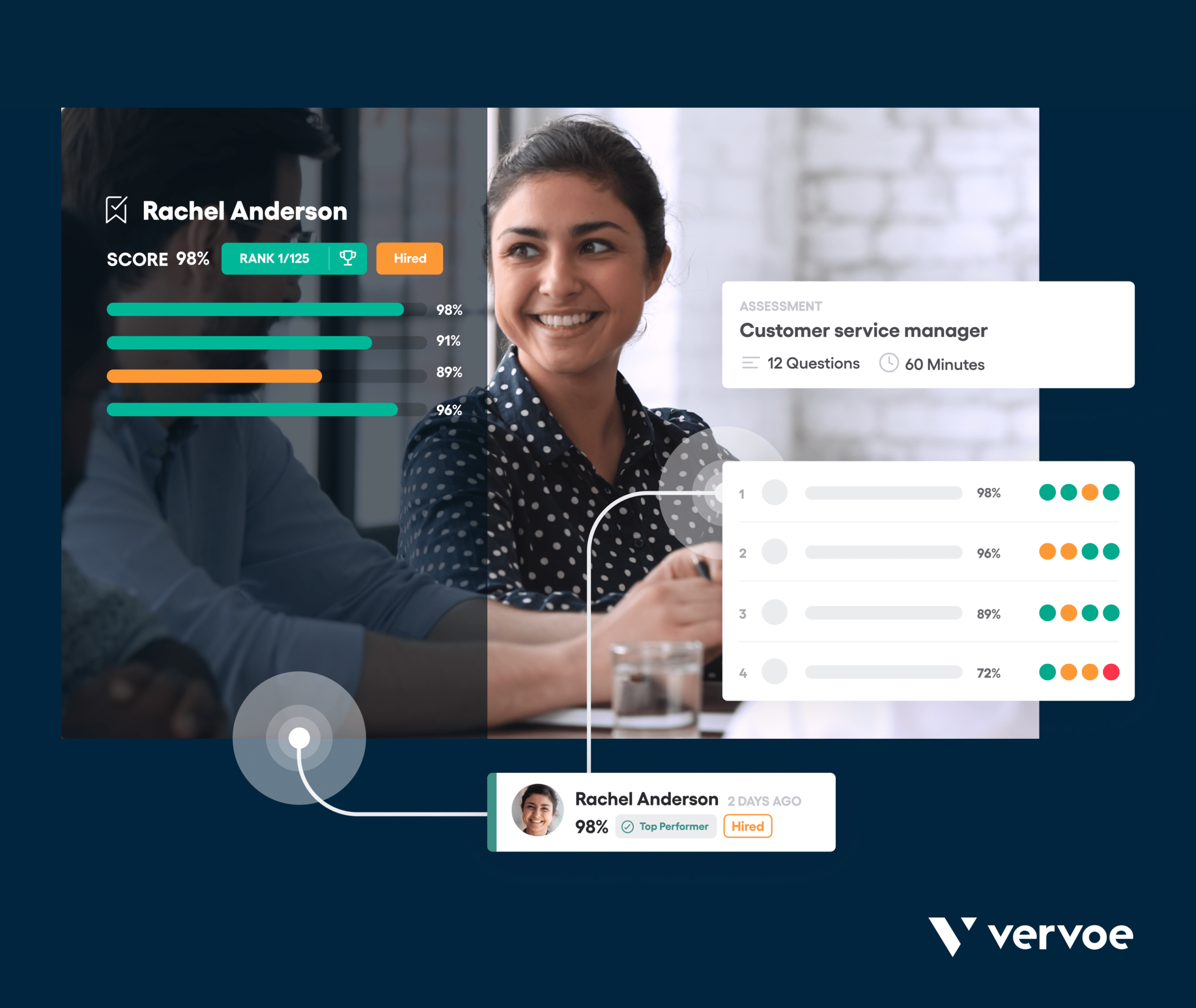

Here at Vervoe, our AI gives a “predicted score” based on the grading preferences it has learned from you. But you can always view a promising candidate’s answers and grade them manually to confirm the AI score.
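A score override can be modeled very simply: keep the model’s prediction and an optional human grade side by side, and let the human grade win whenever it exists. The sketch below is hypothetical (`GradedResponse`, `needs_review`, and the cutoff and margin values are illustrative) and is not Vervoe’s API. It also flags ungraded candidates scored just below a cutoff, since those are the ones most worth a manual look.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradedResponse:
    candidate: str
    predicted_score: float                 # the model's prediction
    manual_score: Optional[float] = None   # set when a reviewer grades by hand

    @property
    def final_score(self) -> float:
        """A human grade, when present, always overrides the prediction."""
        if self.manual_score is not None:
            return self.manual_score
        return self.predicted_score


def needs_review(responses, cutoff=70.0, margin=10.0):
    """Ungraded candidates scored just below the cutoff: worth a manual look."""
    return [
        r.candidate
        for r in responses
        if r.manual_score is None and cutoff - margin <= r.predicted_score < cutoff
    ]


pool = [GradedResponse("Ana", 65.0), GradedResponse("Ben", 40.0), GradedResponse("Cai", 90.0)]
print(needs_review(pool))  # ['Ana']
pool[0].manual_score = 78.0  # a reviewer reads Ana's answers and grades higher
print(pool[0].final_score)   # 78.0
```

Checking `is not None` rather than truthiness matters here: a deliberate manual grade of 0 must still override the prediction.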

The team will constantly reflect on the purpose, risks, strengths, and limitations of their AI systems. That way, they can understand the system and help organizations using it avoid potential unfairness.

The data behind the AI technology will have an equal representation of people from diverse backgrounds to avoid bias. Also, look out for diversity in the development team itself.

Finally, responsible AI recruitment tools must respond to changes in society and technology. They must adapt to changing inclusion policies, demographics, and the evolving nature of work, and the team must commit to revising the data and retraining the models periodically.

Transparency: Is the training data accessible?

To ensure fairness, the training data of the AI recruitment tools should be open to the public and verifiable by third parties. The code, data, and purpose should be legible so people can understand how it works.

The team also needs to provide explanations about how the system works. At Vervoe, we have several pages explaining how our machine learning AI works, including our AI FAQs page.

Create fair hiring processes with Vervoe

62% of companies have lost revenue because of biased AI. Lawsuits, damage to brand reputation, employee attrition, and low employee productivity are just some of the effects.

With Vervoe, you can avoid those losses. Vervoe uses consistent, objective, merit-based assessments that give every candidate the opportunity to perform.

Vervoe’s AI also keeps assessments fair with anti-cheating features like question randomization. When this feature is turned on, applicants see the assessment questions in a new order every time. And you can create assessments in as little as 30 seconds.
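Question randomization comes down to giving each applicant their own shuffled copy of the question list. The minimal sketch below uses Python’s `random.shuffle` and hypothetical questions; it is not Vervoe’s implementation. An optional seeded `random.Random` makes the order reproducible for testing or auditing.

```python
import random

# Hypothetical assessment questions.
QUESTIONS = [
    "Respond to an upset customer",
    "Prioritize this ticket queue",
    "Draft a follow-up email",
    "Find the error in this invoice",
]


def randomized_order(questions, rng=None):
    """Return a shuffled copy for one applicant; the master list is not modified."""
    if rng is None:
        rng = random.Random()
    order = list(questions)
    rng.shuffle(order)  # in-place shuffle of the copy
    return order


for applicant in ["Ana", "Ben"]:
    print(applicant, randomized_order(QUESTIONS))
```

With n questions there are n! possible orders, so with only four questions (24 orders) two applicants will occasionally see the same sequence. Longer assessments make repeats much rarer.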

To learn more about Vervoe’s features and how our AI works, request a demo today. We are accountable, responsible, and transparent with how our system works.