Why we’re writing about facial analysis in hiring

You’ve probably read about the use of facial analysis – also known as expression analysis, and often confused with facial recognition – in hiring. In this article we’re going to explain what facial analysis is, how it’s used in recruitment… and why we’re against it.

That’s right, at the risk of alienating some of you, we’re taking a strong position against the use of facial analysis in hiring.

Why facial analysis exists

Recruitment is hard. The administrative tasks of sourcing, pre-employment screening, interviewing and selecting candidates require an enormous amount of work. When you’re under pressure, you need to find ways to cut the candidate pool to hire fast.

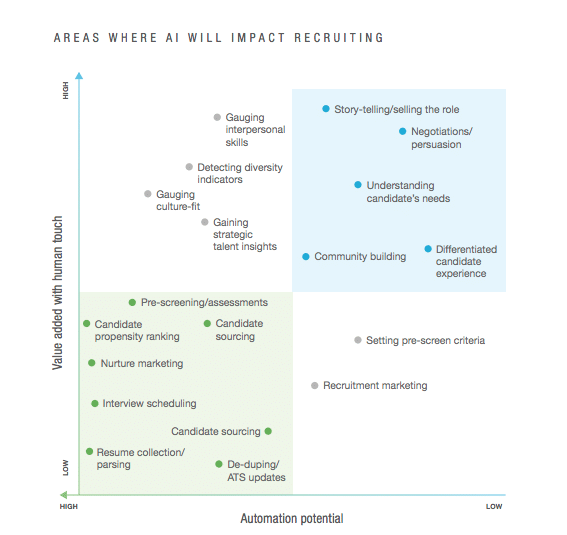

So, out of necessity, software providers have stepped in and internal processes have evolved to lighten the load. We turn to artificial intelligence. Machine learning. Automation. A combination of them all.

There’s a huge appetite for a whole range of AI solutions in recruitment. The 2018 LinkedIn Global Recruiting Trends report found that 67% of leaders surveyed embraced AI because it helps them save time.

One of these solutions is facial analysis in hiring. Video interviewing has been around for quite some time, and there are ways that AI tools can be applied to review video at scale. At Vervoe, we have a few tricks up our sleeve for this. But facial analysis isn’t one of them, and we’re going to explain why.

First, we’ll break down the two kinds of technology we’re talking about.

What is facial recognition?

Facial recognition technology can tell if there’s a face in an image or video, and often whose face it is. In general, the algorithm behind it has been trained on a large database of faces, and it compares the subject image against what it has learned in order to detect and match facial features.

We’re used to using facial recognition day-to-day:

- You look at your smartphone and it unlocks: facial recognition.

- Facebook suggests a photo you might be tagged in: facial recognition.

- You add a puppy face to your Instagram story: facial recognition.

Facial recognition is used to keep us safe in public spaces, like airports, rail stations, stadiums and cash points. And there are some exciting medical applications for facial recognition. Then there are some creepier, more sinister applications in surveillance and law enforcement that we won’t go into here.

In recruitment, facial recognition is usually used for proctoring and identity verification.

What is facial analysis?

Facial analysis doesn’t just identify a face. It tries to analyse a person’s emotion, intention, cognitive process or physical effort based on visual information. The software might take into account context, body gesture, voice, individual differences and cultural factors, as well as facial configuration and timing.

In recruitment, this technology is being applied to the candidate screening process. Coming back to the need for efficiency, it seems like a nifty application of AI to get through large volumes of applications. It has been claimed that this tech cuts the hiring process by 90%.

Magic, right? Getting to know someone’s personality and behaviour through video? And super fast? Amazing! Vervoe, why aren’t you doing this?

Why facial analysis is imperfect

Facial analysis systems are pretty good at detecting facial movements. They can tell the difference between someone smiling and someone frowning. But they can be imprecise when it comes to interpreting what those movements mean.

“Facial expressions vary by culture and also by context,” comments I/O psychologist Orin Davis. “The latter is particularly important in an interview. We are continuously reading our conversation partner(s) and updating our responses (and facial expressions) in real-time.

“For instance, if you start to make a joke during the interview with a human being and you get a cue that the joke will fall flat (for instance, if it requires trivia that the person appears not to have and the person looks puzzled), you can direct your line of conversation away from the joke. With a video interview, you are simply monologuing to a camera, which has no relationship whatsoever to how we react in front of people.”

Lisa Feldman Barrett, a neuroscientist who studies emotion, commented on the example of “scowling”: “A computer might see a person’s frown and furrowed brow and assume they’re easily angered,” she said, “a red flag for someone seeking a sales associate job.”

“But people scowl all the time when they’re not angry: when they’re concentrating really hard, when they’re confused, when they have gas.”

Davis adds, “It’s important to keep in mind that there are very few universal facial expressions, and all of them are basic (e.g., happiness, sadness, disgust). None of that comes even close to approximating actual lasting attributes of a person – one would be better off analyzing the content of the words alone, like an essay.

“Humans have a hard enough time figuring these rules out, and there’s no way we can transmit them to computers when we don’t understand them ourselves.”

The confusing landscape of the human face aside, we need to consider how these interpretations are being used in recruitment.

Facial analysis results are usually matched against personality and culture-fit profiles. The output claims to tell you whether a candidate is excited, stressed, confident or calm, and how well they match your organisation’s “face”.

We’ve already covered why personality tests suck in hiring, so to keep this brief: Easy to misinterpret. Used out of context. Biased. And most importantly, not predictive of employee performance.

The other thing is that by matching a face to a personality profile, you’re perpetuating (worse, scaling) the flaws in traditional hiring: making recruitment decisions based on chemistry, mood or some other context rather than skills for the role. You’re making decisions based on bias. Using facial analysis in hiring to find a good personality fit is just doing the same thing, but faster.

How facial analysis perpetuates bias

Remember the LinkedIn Global Recruiting Trends report mentioned earlier? In it, 47% of HR leaders said they looked forward to AI helping them combat bias.

To be bias-free, the AI needs to be trained on a lot of facial data. And that data needs to be diverse. If not, there could be unintentional discrimination against people whose faces read differently than those of the general population. Examples include people from different racial or cultural backgrounds, or people with facial injuries: things that have nothing to do with how a person performs in a role.

With a narrow data source, you risk building an algorithm that consolidates, perpetuates and potentially even amplifies existing beliefs and biases.

That is what most facial analysis vendors in the recruitment world do. They model an organisation’s preferences on the high performers who are already in the organisation. Then candidates’ responses are matched, as one vendor put it, against a “benchmark of success” from past performers, such as whether they met sales quotas or resolved customer calls. This biased method leads to hiring more of the same people you already have.

We recently wrote about bias (actually, it’s something we write a lot about). In that article, one of our experts, Orin Davis, highlighted the risk of “cloning error”, where businesses seek to replicate their top performers. They use their star employees’ profiles as the standard for future hires.

“People assume that a person who was successful in a role has a measurable set of personality characteristics and strengths,” says Davis. “As such, replacing that person requires finding someone just like them.”

So? Don’t you want thousands of your top performers wandering around the office?

What makes one employee successful doesn’t necessarily make another successful. We know diversity gives you greater access to a range of talent. A diverse workplace gives you broader insight into your customers’ needs and motivations.

Diversity makes your organisation more effective, more successful and more profitable. Narrowing your view with facial analysis technology doesn’t lead to a diverse workforce.

And using facial analysis in hiring could be driving diverse applicants away from your business.

Candidates don’t like facial analysis

Candidates are worried about AI video interviews. “They want to know they’re being fairly assessed for their ability to do the role, not how they express themselves while answering questions,” explains Stacie Garland from the Vervoe Behavioural Science team.

So, candidates have tried to game the system. Maybe you’ve come across recent articles about cram schools in Korea dedicated to training people to beat AI video interviews.

Online courses have been set up to advise job applicants on how to beat the AI overlords. Hundreds of people hang on advice like, “Don’t force a smile with your lips, smile with your eyes.”

There’s nothing wrong with being prepared for an interview. Researching the company and reflecting on your skills are important. Practicing and learning for the next role is vital. But only if it’s related to the role. Practice writing code to write better code. Practice designing websites to design better websites. Practice writing content to write better content.

How is practicing smiles helpful to anyone?

Our stance

Vervoe doesn’t do facial analysis. We never will.

“If you wanted to know how good Roger Federer was at tennis, you wouldn’t have a coffee and chat with him, you’d ask him to hit a ball.

“We get applicants for a design role to actually design something. We get financial analysts to examine records in a spreadsheet. We get sales reps to write a cold email.

“We really want to level the playing field by making the choice simply about how well you can do the job and contribute to the organisation.”

Omer Molad, Co-founder and CEO of Vervoe

Facial analysis technology doesn’t help you hire based on merit. What matters most in making hiring decisions is understanding which candidate can actually perform the role they have applied for.

You can start screening candidates based on skills with a free trial of Vervoe.